There is probably a more elegant description for what I am proposing, but the best way I can explain what I am envisioning is this:

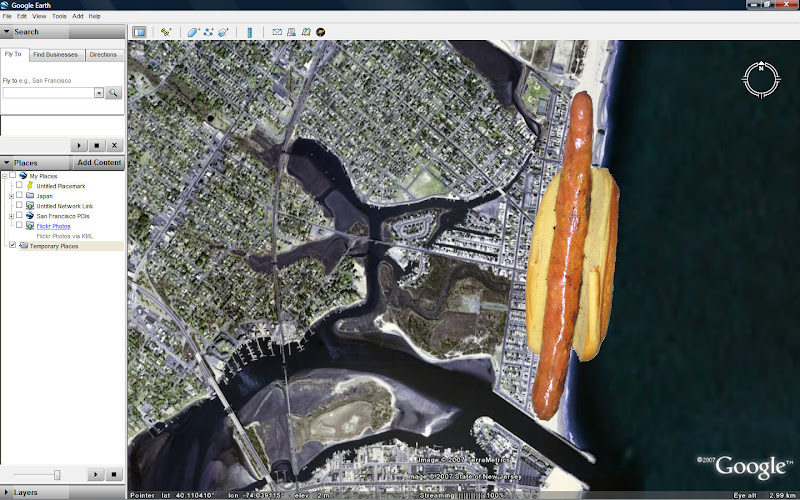

An Immersive Hotdog is a georeferenced, linear collection of immersive photographs that together form a seamless immersive environment, which can be entered in a Geospatial Exploration System such as Google Earth, Microsoft Virtual Earth, ESRI ArcGIS Explorer, or NASA's WorldWind. The shape of these immersive collections roughly resembles a hotdog.

Imagine, if you will, a long hotdog-shaped object lying on the Earth: about 100 feet wide, 100 feet tall, and about a mile long.

Now imagine that this hotdog-shaped object is lying on the ground in Google Earth, and that it is textured with real ground-level photography taken from the center of the hotdog.

This is the Immersive Hotdog. The source imagery can come from a video camera collection system such as Immersive Media's, or from a standard immersive photograph collection system such as iPIX.

These collections have existed in stand-alone viewers for years; however, we haven’t yet visualized this hotdog in a truly 3D environment, and I think we should.

So I started a little proof-of-concept experiment on Christmas Eve.

I didn’t have a fancy Immersive Media video camera, but I did borrow an iPIX kit to begin exploring the problem.

On Christmas Eve I walked down the boardwalk in Manasquan, NJ, and took a series of iPIX shots every few houses, bringing along my trusty new QSTAR Solar GPS Bluetooth Data logger to record the location of each shot.

I then returned home and synced the GPS log from the QSTAR logger with the iPIX fisheye photos using the excellent Google Picture Sync (GPicSync) application.
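GPicSync does this matching for you, but for anyone curious about the general idea, here is a minimal sketch (not GPicSync's actual code) of timestamp-based geotagging: find the two log records that bracket a photo's capture time and interpolate linearly between them. It assumes the photo time has already been shifted to the logger's clock; the `max_gap` cutoff and the function name are my own hypothetical choices.

```python
from bisect import bisect_left
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# One GPS log record: (timestamp, latitude, longitude) in decimal degrees.
TrackPoint = Tuple[datetime, float, float]


def interpolate_position(track: List[TrackPoint], photo_time: datetime,
                         max_gap: timedelta = timedelta(seconds=30)
                         ) -> Optional[Tuple[float, float]]:
    """Estimate where a photo was taken from a time-sorted GPS track.

    photo_time must already be on the logger's clock. Returns None if the
    photo falls outside the track, or if the two bracketing fixes are more
    than max_gap apart (a hypothetical cutoff).
    """
    times = [t for t, _, _ in track]
    i = bisect_left(times, photo_time)
    if i < len(track) and times[i] == photo_time:
        return track[i][1], track[i][2]          # exact match with a log record
    if i == 0 or i == len(track):
        return None                              # before the first or after the last fix
    (t0, lat0, lon0), (t1, lat1, lon1) = track[i - 1], track[i]
    if t1 - t0 > max_gap:
        return None                              # logger gap too large to trust
    f = (photo_time - t0) / (t1 - t0)            # fraction of the way from t0 to t1
    return lat0 + f * (lat1 - lat0), lon0 + f * (lon1 - lon0)
```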

Here are the results:

(144 geotagged raw iPIX fisheye shots, which you can download with full GPS EXIF data here.)

(Hint: use the "Download Album" option on the left side to download all the images directly into your own Picasa.)

(Because Google's PicasaWeb reads EXIF GPS data, you can also view all of these images on a Google Map here.)

Each iPIX shot consists of two fisheye shots, taken 180º opposed to one another, which we need to stitch into a single equirectangular image.
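To make the stitching step concrete, here is a rough sketch of the inverse mapping a stitcher performs: for every pixel of the output equirectangular image, work out which of the two fisheyes sees that direction and where in that fisheye to sample. It assumes two ideal, equidistant 180º fisheye lenses (one facing longitude 0, one facing 180) with a known image-circle center and radius; real iPIX lenses, their calibration, and seam blending are not modeled, and this is not how the iPIX software itself is implemented.

```python
import math
from typing import Tuple


def equirect_to_fisheye(u: float, v: float, width: int, height: int,
                        circle_radius: float, cx: float, cy: float
                        ) -> Tuple[str, float, float]:
    """Map an equirectangular pixel (u, v) to (lens, x, y) in a source fisheye.

    Assumes (hypothetically) ideal equidistant 180-degree lenses: 'front'
    looks along +z (longitude 0), 'back' along -z (longitude 180).
    """
    lon = (u + 0.5) / width * 2 * math.pi - math.pi      # -pi .. +pi
    lat = math.pi / 2 - (v + 0.5) / height * math.pi     # +pi/2 (top) .. -pi/2
    # Unit direction vector: x right, y up, z forward.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    if z >= 0:
        lens = "front"
    else:
        # The back lens is the front lens rotated 180 degrees about the y axis.
        lens, x, z = "back", -x, -z
    theta = math.acos(max(-1.0, min(1.0, z)))   # angle away from the optical axis
    r = theta / (math.pi / 2) * circle_radius   # equidistant model: r grows linearly with theta
    phi = math.atan2(y, x)                      # direction around the image circle
    return lens, cx + r * math.cos(phi), cy - r * math.sin(phi)  # image y points down
```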

Here’s the first problem: each fisheye picture is taken at a slightly different time from its partner, so the GPS position wavers a little in between.

That’s actually a good thing, because we can average the two positions of the pair to find a position, perhaps a more accurate one, somewhere “between” both shots, and apply it to the single equirectangular image we get when we stitch the photos together.

For example, the two shots “exploded” in Google Earth above are only slightly “off” from each other in latitude and longitude; we would average their positions and apply the average to the resulting image below. (The iPIX software currently does NOT offer this option, so the first challenge is to average both positions from the source images and write the average to the EXIF of the resulting image.)
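Since the two fisheye fixes of a pair are only a few meters apart, a plain arithmetic mean of latitude and longitude is a reasonable stand-in for the midpoint. The sketch below assumes the shots are sorted in capture order with exactly two per scene (shots 1+2, 3+4, ...), which is how 144 fisheyes would become 72 positions; it does not handle pairs that straddle the 180º meridian. Function names are hypothetical.

```python
from typing import List, Tuple

LatLon = Tuple[float, float]  # (latitude, longitude) in decimal degrees


def average_pair(a: LatLon, b: LatLon) -> LatLon:
    """Arithmetic mean of two nearby fixes (fine for fixes a few meters apart)."""
    return (a[0] + b[0]) / 2, (a[1] + b[1]) / 2


def average_pairs(fixes: List[LatLon]) -> List[LatLon]:
    """Collapse consecutive fisheye fixes into one position per stitched scene.

    Assumes fixes are in capture order with two shots per scene.
    """
    if len(fixes) % 2:
        raise ValueError("expected an even number of fisheye shots")
    return [average_pair(fixes[i], fixes[i + 1]) for i in range(0, len(fixes), 2)]
```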

(The 72 equirectangular images can be downloaded here.)

I am thinking that a script could be written around Phil Harvey's ExifTool to automatically read the GPS EXIF locations of the two source images, average them, and write the result into the corresponding equirectangular shot.
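As a starting point for such a script, here is a minimal Python wrapper around the `exiftool` command line. It reads the standard EXIF GPS tags with `-j -n` (JSON output, numeric values), averages the front and back positions, and writes the result into the stitched image. It assumes `exiftool` is on the PATH and that the source images already carry GPicSync's GPS tags; the function and file names are hypothetical. By default ExifTool keeps a `_original` backup of any file it writes.

```python
import json
import subprocess
from typing import List, Tuple

# Standard EXIF GPS tags: unsigned values plus N/S and E/W reference letters.
GPS_TAGS = ["-EXIF:GPSLatitude", "-EXIF:GPSLatitudeRef",
            "-EXIF:GPSLongitude", "-EXIF:GPSLongitudeRef"]


def parse_gps_json(text: str) -> Tuple[float, float]:
    """Turn ExifTool's -j -n output for ONE file into signed decimal degrees."""
    rec = json.loads(text)[0]
    lat = abs(float(rec["GPSLatitude"]))
    lon = abs(float(rec["GPSLongitude"]))
    if rec.get("GPSLatitudeRef") == "S":
        lat = -lat
    if rec.get("GPSLongitudeRef") == "W":
        lon = -lon
    return lat, lon


def read_gps(path: str) -> Tuple[float, float]:
    """Read one image's GPS position with ExifTool."""
    out = subprocess.run(["exiftool", "-j", "-n", *GPS_TAGS, path],
                         check=True, capture_output=True, text=True).stdout
    return parse_gps_json(out)


def write_gps_args(lat: float, lon: float, target: str) -> List[str]:
    """Build the ExifTool command that writes a signed position as EXIF GPS tags."""
    return ["exiftool",
            f"-GPSLatitude={abs(lat)}", f"-GPSLatitudeRef={'N' if lat >= 0 else 'S'}",
            f"-GPSLongitude={abs(lon)}", f"-GPSLongitudeRef={'E' if lon >= 0 else 'W'}",
            target]


def stamp_averaged_gps(front: str, back: str, target: str) -> Tuple[float, float]:
    """Average the two source fisheye positions and write them into the stitched image."""
    (lat1, lon1), (lat2, lon2) = read_gps(front), read_gps(back)
    lat, lon = (lat1 + lat2) / 2, (lon1 + lon2) / 2
    subprocess.run(write_gps_args(lat, lon, target), check=True)
    return lat, lon
```

For example, `stamp_averaged_gps("shot001.jpg", "shot002.jpg", "scene001.jpg")` would tag the first stitched scene (file names hypothetical).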

We can then take the equirectangular image and wrap it around a spherical `<PhotoOverlay>` object in KML 2.2, which will form the first “end of the hotdog.”

The best way I know to do that today is with the PhotoOverlay Tool from Digital Urban.
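For anyone who would rather generate the KML directly, here is a sketch of what a spherical PhotoOverlay can look like. The ViewVolume spans the full 360º × 180º of an equirectangular image, `<shape>sphere</shape>` wraps the image on a sphere, and `<near>` sets the sphere's radius in meters. The default camera height, radius, and heading are placeholders, and my understanding (an assumption worth checking against the KML reference) is that the Camera's heading controls which way the sphere is rotated.

```python
from typing import List
from xml.sax.saxutils import escape


def sphere_overlay(name: str, href: str, lat: float, lon: float,
                   heading: float = 0.0, near: float = 10.0,
                   altitude: float = 2.0) -> str:
    """Return a KML 2.2 <PhotoOverlay> wrapping an equirectangular image on a sphere.

    heading, near (sphere radius, m) and altitude (camera height, m) are
    hypothetical defaults.
    """
    return f"""<PhotoOverlay>
  <name>{escape(name)}</name>
  <Camera>
    <longitude>{lon}</longitude>
    <latitude>{lat}</latitude>
    <altitude>{altitude}</altitude>
    <heading>{heading}</heading>
    <tilt>90</tilt>
    <roll>0</roll>
    <altitudeMode>relativeToGround</altitudeMode>
  </Camera>
  <Icon><href>{escape(href)}</href></Icon>
  <ViewVolume>
    <leftFov>-180</leftFov>
    <rightFov>180</rightFov>
    <bottomFov>-90</bottomFov>
    <topFov>90</topFov>
    <near>{near}</near>
  </ViewVolume>
  <Point>
    <altitudeMode>relativeToGround</altitudeMode>
    <coordinates>{lon},{lat},{altitude}</coordinates>
  </Point>
  <shape>sphere</shape>
</PhotoOverlay>"""


def kml_document(overlays: List[str]) -> str:
    """Wrap one or more PhotoOverlays in a KML 2.2 document."""
    body = "\n".join(overlays)
    return ('<?xml version="1.0" encoding="UTF-8"?>\n'
            '<kml xmlns="http://www.opengis.net/kml/2.2">\n<Document>\n'
            f"{body}\n</Document>\n</kml>\n")
```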

We can then repeat this for each successive scene.

As we keep going, we begin to “fill the hotdog.”

To do this at scale we would need a way to automate the PhotoOverlay tool (it does have a batch-processing capability), and we would also need to be able to define each sphere's rotation angle so that the spheres line up properly on the Earth.
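One way to get a rotation angle automatically is to point each sphere toward the next shot along the path, using the standard great-circle initial bearing formula. This assumes the center of each equirectangular image faces the direction of travel, which depends on how the camera was held; if it does not, a constant offset would need to be added. The last scene simply reuses the previous heading. Function names are hypothetical.

```python
import math
from typing import List, Tuple


def initial_bearing(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle initial bearing from point 1 to point 2, degrees clockwise from north."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(p2)
    y = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def scene_headings(positions: List[Tuple[float, float]]) -> List[float]:
    """Heading for each scene, pointing at the next scene along the path.

    Assumes (hypothetically) the image center faces the direction of travel.
    """
    if len(positions) < 2:
        return [0.0] * len(positions)
    hs = [initial_bearing(*a, *b) for a, b in zip(positions, positions[1:])]
    return hs + [hs[-1]]  # last scene has no successor, so reuse the previous heading
```

The resulting angles, in degrees clockwise from north, could be fed to the `<heading>` of each PhotoOverlay's `<Camera>`.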

Also, many of the spheres I create with the PhotoOverlay tool are way too large; i.e., the FOV Near setting is too big.
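One heuristic for choosing a smaller Near value (my own assumption, not a PhotoOverlay Tool feature) is to size each sphere from the spacing between shots: take half the distance to the closest neighboring shot, so adjacent spheres just touch instead of overlapping. Distances come from the haversine formula on a spherical Earth, which is plenty accurate over a few houses; `fraction` and `floor_m` are hypothetical tuning knobs.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6371008.8  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points (spherical Earth)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def near_values(positions: List[Tuple[float, float]],
                fraction: float = 0.5, floor_m: float = 1.0) -> List[float]:
    """Sphere radius per scene: fraction of the distance to the closest neighbor.

    With fraction=0.5, adjacent spheres roughly touch; floor_m keeps tiny or
    duplicate fixes from producing near-zero spheres.
    """
    n = len(positions)
    result = []
    for i in range(n):
        gaps = [haversine_m(*positions[i], *positions[j])
                for j in (i - 1, i + 1) if 0 <= j < n]
        result.append(max(floor_m, fraction * min(gaps)) if gaps else floor_m)
    return result
```

The returned values, in meters, would go into the `<near>` element of each sphere's `<ViewVolume>`.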

For now, the Immersive Hotdog is limited in Google Earth because we are restricted to using multiple separate sphere objects, which produces something more like a pea pod than a hotdog.

But there has been some fantastic development from Microsoft lately in photo stitching (HD View, Microsoft Live Photo Gallery, Photosynth, Birdseye 3D, etc.).

Maybe their developers have a solution that would not only build the sphere objects successively but actually stitch the scenes together seamlessly into true Immersive Hotdog objects?

Or maybe someone clever out there has an idea of how to do this?

Please let me know!
