As Hurricane Sandy looms off the Eastern Seaboard, poised to cause a massive incident in the United States, one of the largest concerns is prolonged power outages, along with possible connectivity outages due to downed towers and utility lines.

No Power + No Internet = No Internet Based Maps..... Unless..

Thanks to the awesome TileMill software by MapBox, I was able to generate tiles for the entire northeastern United States down to zoom level 15, in beautiful and useful detail, in about 9 hours. You can see the extent of the cut below.

I've tried to scope the cut to the areas most likely to be impacted by the exceptionally large Sandy based on NOAA's projected storm path.

TileMill allows tile sets to be exported in the open MBTiles packaging format, which is a SQLite database that contains the tiles and any metadata.
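Because an MBTiles package is just a SQLite file, you can peek inside it with nothing but Python's standard library. The sketch below assumes the `metadata` and `tiles` tables (or views) described in the MBTiles specification; the function names are my own, and I have not run it against the Sandy package specifically.

```python
import sqlite3
from contextlib import closing


def read_metadata(mbtiles_path):
    """Return the name/value pairs of the MBTiles metadata table as a dict."""
    with closing(sqlite3.connect(mbtiles_path)) as db:
        return dict(db.execute("SELECT name, value FROM metadata"))


def read_tile(mbtiles_path, zoom, x, y):
    """Return the raw image bytes for a slippy-map (XYZ) tile, or None."""
    # MBTiles stores rows in TMS order (row 0 at the south), so flip y.
    tms_row = (2 ** zoom - 1) - y
    with closing(sqlite3.connect(mbtiles_path)) as db:
        row = db.execute(
            "SELECT tile_data FROM tiles "
            "WHERE zoom_level = ? AND tile_column = ? AND tile_row = ?",
            (zoom, x, tms_row),
        ).fetchone()
    return bytes(row[0]) if row else None
```

Calling `read_metadata("Sandy_OSMBright_North_East_US_15.mbtiles")` should give you whatever TileMill wrote at export time, typically things like the name, bounds, and zoom range.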

Anyone working in the field, or working with first responders in the impact area, might want to download this dataset for use if power and/or internet connectivity is lost during the storm and the likely prolonged response and recovery period.

I can't vouch for the total accuracy of the data or its rendering, since it is entirely sourced from OpenStreetMap and MapBox's OSM Bright styling, and I take no responsibility for your use of the data.

You can download the 16GB MBTiles package here.

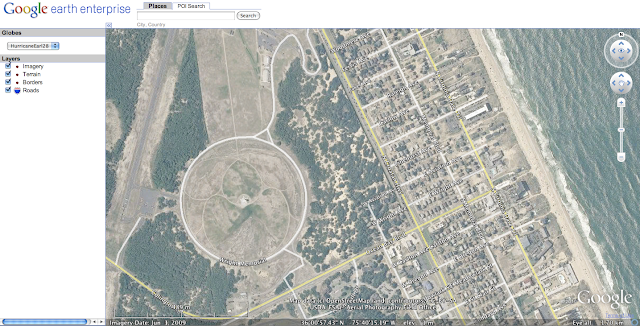

Here are a few example screenshots of the level of detail provided in this tile set in various locations in the northeast.

New York, NY

Washington, DC

Boston, MA

Manasquan, NJ

Since the entire package for the northeast is ~16GB, it is too large to put on an iPad or other mobile device. But the tiles are already generated, and it is easy to dump them from MBTiles to a flat directory structure, or serve them on a local area network or from your laptop, and then view them with a viewer like OpenLayers.

There might also be utilities to cut smaller MBTiles packages from this one; if anyone knows of something like that, please leave a comment, or if it doesn't exist... build it!
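To give an idea of what such a cutting utility could look like, here is a rough sketch that copies only the tiles inside a longitude/latitude bounding box into a new, smaller MBTiles file. It is not an existing tool: `lonlat_to_tile` and `cut_mbtiles` are hypothetical names, it assumes the standard `metadata` and `tiles` tables from the MBTiles specification, and it expects the destination file not to exist yet.

```python
import math
import sqlite3
from contextlib import closing


def lonlat_to_tile(lon, lat, zoom):
    """Return the slippy-map (XYZ) column and row containing a point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Clamp so points on the map edge still fall on a valid tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def cut_mbtiles(src_path, dst_path, west, south, east, north, max_zoom=15):
    """Copy tiles intersecting the bounding box into a new MBTiles file."""
    with closing(sqlite3.connect(src_path)) as src, \
            closing(sqlite3.connect(dst_path)) as dst:
        # dst_path must be a new file, otherwise these CREATEs will fail.
        dst.execute("CREATE TABLE metadata (name text, value text)")
        dst.execute(
            "CREATE TABLE tiles (zoom_level integer, tile_column integer, "
            "tile_row integer, tile_data blob)")
        dst.execute(
            "CREATE UNIQUE INDEX tile_index "
            "ON tiles (zoom_level, tile_column, tile_row)")

        # Carry the source metadata over, but record the new, smaller bounds.
        meta = dict(src.execute("SELECT name, value FROM metadata"))
        meta["bounds"] = "%s,%s,%s,%s" % (west, south, east, north)
        dst.executemany("INSERT INTO metadata VALUES (?, ?)", list(meta.items()))

        for zoom in range(max_zoom + 1):
            x_min, y_top = lonlat_to_tile(west, north, zoom)
            x_max, y_bottom = lonlat_to_tile(east, south, zoom)
            flip = 2 ** zoom - 1  # MBTiles rows are in TMS order
            rows = src.execute(
                "SELECT zoom_level, tile_column, tile_row, tile_data "
                "FROM tiles WHERE zoom_level = ? "
                "AND tile_column BETWEEN ? AND ? AND tile_row BETWEEN ? AND ?",
                (zoom, x_min, x_max, flip - y_bottom, flip - y_top))
            dst.executemany("INSERT INTO tiles VALUES (?, ?, ?, ?)", rows)
        dst.commit()
```

Something like `cut_mbtiles("Sandy_OSMBright_North_East_US_15.mbtiles", "nyc.mbtiles", -74.3, 40.4, -73.6, 41.0)` would, under those assumptions, produce a package covering roughly the New York City area that is far more likely to fit on a mobile device.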

A simple way to dump MBTiles packages to flat directory structures (/Zoom/X/Y.png) for use in slippy maps is MapBox's MBUtil.

MBUtil is a simple Python app that reads the MBTiles SQLite database and can export it to a directory.

Use is dead simple:

python mb-util Sandy_OSMBright_North_East_US_15.mbtiles /somedirectory

Also, Michal Migurski's TileStache will directly serve an MBTiles dataset without needing to inflate the package to tiles on disk; it just takes a little configuration to do so.
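If installing TileStache isn't practical in the field, the same idea (serving tiles straight out of the MBTiles file) can be sketched with Python's standard library alone. To be clear, this is not TileStache and not how it works internally; `lookup_tile`, `make_handler`, and `serve` are names I made up, the port is arbitrary, and it assumes PNG tiles stored in the standard MBTiles `tiles` table.

```python
import re
import sqlite3
from contextlib import closing
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Matches slippy-map style URLs such as /15/9647/12320.png
TILE_URL = re.compile(r"^/(\d+)/(\d+)/(\d+)\.png$")


def lookup_tile(mbtiles_path, zoom, x, y):
    """Return tile bytes for an XYZ address, or None if it is not stored."""
    tms_row = (2 ** zoom - 1) - y  # MBTiles rows are in TMS order
    with closing(sqlite3.connect(mbtiles_path)) as db:
        row = db.execute(
            "SELECT tile_data FROM tiles "
            "WHERE zoom_level = ? AND tile_column = ? AND tile_row = ?",
            (zoom, x, tms_row),
        ).fetchone()
    return bytes(row[0]) if row else None


def make_handler(mbtiles_path):
    """Build a request handler class bound to one MBTiles file."""

    class TileHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            match = TILE_URL.match(self.path)
            tile = None
            if match:
                zoom, x, y = (int(part) for part in match.groups())
                tile = lookup_tile(mbtiles_path, zoom, x, y)
            if tile is None:
                self.send_error(404, "No such tile")
                return
            self.send_response(200)
            self.send_header("Content-Type", "image/png")
            self.send_header("Content-Length", str(len(tile)))
            self.end_headers()
            self.wfile.write(tile)

    return TileHandler


def serve(mbtiles_path, port=8080):
    """Serve tiles to the local network until interrupted."""
    server = ThreadingHTTPServer(("", port), make_handler(mbtiles_path))
    server.serve_forever()
```

Running `serve("Sandy_OSMBright_North_East_US_15.mbtiles")` on a laptop would let a slippy map on the same network request tiles at `http://<laptop-address>:8080/{z}/{x}/{y}.png`. It opens a new SQLite connection per request, which is simple rather than fast, so treat it as a stopgap.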

An alternative to this approach for Android users is to use offline Google Maps. You can easily save offline maps for Sandy's impact area in the Google Maps application while you still have an internet connection.