As of 0800 this morning, there is a chance that Hurricane Earl will brush the Eastern Seaboard of the United States, possibly as a major hurricane.

About a month ago, I created a screencast and blog post about the new Google Earth Enterprise Portable system. They described how we had prepared a Google Earth Enterprise globe of 1-meter NAIP imagery and OpenStreetMap.org vector data for the Gulf and Atlantic coasts of the United States in preparation for an active 2010 hurricane season.

The portable cutter works very well. It took just over 16 minutes to create a complete copy of the Google Earth globe that a first responder would want to take into the field should Earl make landfall and knock out communications infrastructure.

To review, this is how simple it is to create a Google Earth Enterprise Portable globe for field deployment.

1. Prepare to Cut

The Google Earth Enterprise software includes a web-based cutter application which asks you to name the globe you'd like to create, specify a spatial extent, and provide a brief description.

In this case, I'm using the NOAA National Hurricane Center's 72-hour Cone of Uncertainty as my spatial extent. This means the cutter will extract data from the globe at relatively low resolution outside the cone and at full resolution inside it.
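The inside/outside decision described above boils down to a point-in-polygon test. The sketch below is only an illustration of that idea, not the cutter's actual implementation: it uses the standard even-odd (ray casting) test, and the cone coordinates and the two level caps (`FULL_RES_LEVEL`, `COARSE_LEVEL`) are hypothetical values chosen for the example.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (longitude, latitude) in degrees

# Hypothetical level caps; the real cutter's settings are not described here.
FULL_RES_LEVEL = 20
COARSE_LEVEL = 10


def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Even-odd (ray casting) test: count edge crossings of a ray cast east from pt."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed.
        if (y1 > y) != (y2 > y):
            # Longitude where this edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def max_level_for(pt: Point, cone: List[Point]) -> int:
    """Full resolution inside the cone, coarse resolution everywhere else."""
    return FULL_RES_LEVEL if point_in_polygon(pt, cone) else COARSE_LEVEL


# Hypothetical, heavily simplified cone polygon over the North Carolina coast.
cone = [(-77.5, 33.0), (-74.0, 33.0), (-73.0, 37.5), (-76.5, 37.5)]
print(max_level_for((-75.5, 35.2), cone))  # near Cape Hatteras: 20
print(max_level_for((-80.8, 35.2), cone))  # near Charlotte, NC: 10
```

A real cone is a longer polygon taken from the NHC forecast, but the same test applies to any simple polygon.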

2. Cut

The next step is to hit the "Build" button and wait while the portable globe is generated.

The cutting operation created a 4.61 gigabyte globe in just over 16 minutes. As the graphic illustrates, almost all of the Outer Banks in North Carolina and parts of the sound intersected the cone of uncertainty, so this globe will have the complete raster and vector data for these areas in full resolution.
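As a rough sense of scale, the figures above imply an average output rate of a little under 5 MB/s. The calculation below assumes decimal units (1 GB = 1000 MB), which the post does not specify. Because the cut took *just over* 16 minutes, using exactly 16 minutes gives an upper bound on the rate.

```python
def throughput_mb_per_s(size_gb: float, minutes: float) -> float:
    """Average output rate in megabytes per second, assuming 1 GB = 1000 MB."""
    return size_gb * 1000 / (minutes * 60)


rate = throughput_mb_per_s(4.61, 16)
print(f"{rate:.2f} MB/s")  # prints "4.80 MB/s"; an upper bound, since the cut ran slightly longer
```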

3. Download and Serve

Once the globe is created, it is available to the entire organization or to an access group of the administrator's choosing. The single .glb file can then be downloaded to run on Windows, Mac, or Linux desktops and laptops.

The globe can either be used locally by one user or broadcast over a local area network for 10 users to share, all without any connectivity to the internet or external networks.

The .glb file can simply be dropped into the Google Earth Portable "Globes" folder, and the browser-based interface will let you select it or any other .glb file on your system. You can view the globe either with the Google Earth API browser plugin (by clicking "view in browser") or in the full Google Earth Enterprise Client by connecting to the localhost server.
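For teams that stage several globes on field laptops, the "drop it into the Globes folder" step is easy to script. This is a minimal sketch, assuming the portable server picks up every .glb file placed in that folder. The folder location varies by install, so it is passed in as a parameter, and the file name `earl_cone.glb` is hypothetical. Nothing here calls a Google Earth API; it only copies and lists files.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List


def install_globe(glb_file: Path, globes_dir: Path) -> Path:
    """Copy a downloaded .glb into the portable server's Globes folder."""
    if glb_file.suffix.lower() != ".glb":
        raise ValueError(f"not a .glb file: {glb_file}")
    globes_dir.mkdir(parents=True, exist_ok=True)
    # copy2 preserves file timestamps along with the contents.
    return Path(shutil.copy2(glb_file, globes_dir / glb_file.name))


def list_globes(globes_dir: Path) -> List[str]:
    """Sorted names of the .glb files currently in the Globes folder."""
    return sorted(p.name for p in globes_dir.glob("*.glb"))


# Demo in a throwaway directory; the file name and contents are placeholders.
with tempfile.TemporaryDirectory() as tmp:
    downloaded = Path(tmp) / "earl_cone.glb"
    downloaded.write_bytes(b"placeholder")
    globes = Path(tmp) / "Globes"
    install_globe(downloaded, globes)
    print(list_globes(globes))  # ['earl_cone.glb']
```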

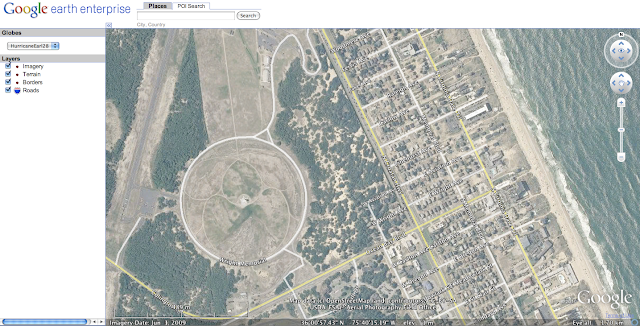

In the browser plugin view, you can see that areas to the north and west of the area of interest are blurry and lack vector data, while areas where Hurricane Earl might make landfall are at full resolution.

Zooming in, you can see the detail of the cut imagery and vector data which would be available to anyone in the field.

For a more detailed discussion about this process, feel free to review the previously posted screencast about Google Earth Enterprise Portable and Hurricane Preparedness here:

If you have any questions, please contact us at google-hurricane-2010@googlegroups.com.